By Micky Tripathi, President and CEO

Massachusetts eHealth Collaborative

This column is about a security incident that my company caused earlier this year. It’s a long and detailed description. I’m confident that those of you who have never been through a security incident will find the article tedious. I invite you to read just the ending on lessons learned, or save your hard-earned free time for one of HIStalk’s other excellent columns.

I’m equally confident that those of you who are going through a security incident (or have been through one) will find the details fascinating, not only because misery loves company, but also because you realize through hard experience that protecting privacy and security is about incredible attention to the small stuff. My experience is that large organizations know this because they pay professionals to worry about it, but most small practices have little to no idea of the avalanche that can follow from the simple loss of a laptop. Anyway, here it goes.

We had a security breach a few months ago. One of our company laptops was stolen from an employee’s car. As luck would have it, the laptop contained some patient data files.

My inner Kubler-Ross erupted.

- Denial. Noooooooooooooooooooo!!! This is surely a nightmare and I’m going to wake up any minute.

- Anger. How dare someone steal our property??!! Who the heck would leave a company laptop unattended in a car parked in a city neighborhood??!!

- Bargaining. Are you sure it was OUR laptop?? Maybe it didn’t have any patient data on it?

- Depression. We’re doomed. Patients’ privacy may be exposed. Some may suffer real harm or embarrassment. They’re going to hate their providers, and their providers are going to hate us. Word will spread, trust in us will erode, we’ll struggle to get new business, we may get fined or sanctioned by state and/or federal authorities, we may get sued by providers or patients or both. My kids won’t go to college, I’ll lose my house, my parents will be disgraced.

- Acceptance. OK, let’s get to work. We have an obligation to our customers, our board, and ourselves to affirmatively take responsibility for our errors, be transparent with all stakeholders, manage the process with operational excellence, and share our lessons learned so that others can hopefully learn from our blunders.

I had to go through all five stages before mustering the energy to write this column.

Our mistakes

Last spring, one of our practice implementation specialists left a briefcase containing a company laptop in their car while they were at lunch. Someone broke into the car and stole the briefcase. We called the police immediately. Since the laptop was in a briefcase and the car was in a random neighborhood (i.e., not parked in a hospital or medical office parking lot), this was almost certainly a random theft and not a specific targeting of patient information.

Luckily for us, we had a fresh backup of the laptop, so we knew exactly what was contained on it. We are an implementation services company, not a provider organization, so you’d think we wouldn’t have any patient records on our machines. Wrong!

One of the things that we routinely do is assist customers with transferring patient demographic data from their old practice management systems to their new EHR/PM systems. And while most of this transfer happens machine-to-machine behind secure firewalls, there are always a number of “kick-outs” — individual patient demographic records that the new system rejects for one reason or another. Part of our job is to examine these error logs and work with our customers to determine how to remediate these rejected records. We usually do this on-site in a secure environment, but sometimes there isn’t enough time to do that during office business hours. In those cases, we copy these error logs onto our laptops and work them offsite.

These files shouldn’t add up to much, right? A file here, a file there, all of which are deleted once we’re through with them. Wrong again! Our forensic analysis of the laptop backup showed that it contained information on 14,314 individuals from 18 practices. On top of that, there were some paper copies of appointment schedules for another 161 patients, making the grand total 14,475. Fresh in my mind was a recent case in which Massachusetts General Hospital was slapped with a $1M fine and front-page headlines after an employee left the medical records of 192 patients on a subway. Uh-oh. I had to sit down.

The bad news kept on coming. In April 2010, we had instituted a company-wide policy requiring encryption of any files containing patient information. If the laptop or the files had been appropriately encrypted, this theft would not have been a breach issue. Turns out that we had been shopping around for whole disk encryption options to reinforce our security policy, but regrettably we hadn’t yet implemented a solution at the time of this incident.

It’s not that the data on that laptop wasn’t well-protected – it was. We are part of a professionally managed enterprise network environment, so our laptops have domain-level, strong usernames and passwords that are routinely changed and disabled after a pre-determined number of failed log-in attempts or if too much time elapses between network log-ins. Furthermore, the error log files containing the patient information were themselves password protected. In short, the chance that anyone would gain access to the information was infinitesimally small. This was a random theft, and it would take strong intent and some technical sophistication to get past the protections that were in place.

And yet … the files were no longer in our control and, without encryption, were indisputably vulnerable. I’d heard the term “my knees weakened” before, but had never experienced it myself … up until that moment, that is.

Our obligations

Privacy and security incidents of this type are governed by federal and state laws and regulations. The federal law is HIPAA and its HITECH modifications. The relevant Massachusetts state law is a recently enacted data protection law which went into effect on March 1, 2010.

We have terrific attorneys. Though we’re a small, non-profit company, we’ve never gone cheap on legal advice or insurance. I was thus surprised and somewhat distressed at how hard it was to disentangle the thicket of state and federal laws in play. Not because our attorneys are bad, but because state and federal regulations are imprecise, not well-aligned, and constantly changing.

Add to that the fact that the rules to implement the HITECH modifications are still just proposals and not final regulations yet, and what we were left with was a grab-bag of statutory and legal piece-parts that we ourselves had to assemble without any instructions or diagrams. For a really excellent description of some of the challenges in the current legal framework, see my friend Deven McGraw’s testimony before the Senate Judiciary Committee’s Sub-Committee on Privacy, Technology, and Law.

It was clear that this wasn’t something that we could just delegate to the lawyers while we went on our merry way. There were complex business and legal judgments to sort through, and the fact that it happened at all suggested that we needed to take a good hard look at ourselves and see what went wrong.

We immediately put together a small crisis team comprising our key customers, our attorneys, and from our company, me, our security officer and our customer project lead. We set up daily end-of-day conference calls to manage and monitor the crisis.

Our interpretation of the legal and statutory requirements was as follows:

Was this a breach? The files were unencrypted and contained individually identifiable information, so the answer was an emphatic “YES”, from both a state and federal perspective. In the case of Massachusetts law, a breach is when “personal information” is disclosed, which includes name plus any of SSN, driver’s license number, financial or credit/debit card account numbers. At the federal level, protected health information (PHI) includes “individually identifiable health information” that relates to health care status, treatment, or payment.

Who needs to be notified? State rules say that we had to notify patients, the state Attorney General, and the Office of Consumer Affairs and Business Regulation (OCABR). Federal rules also require patient notification (within 60 days), as well as notification to the federal Office for Civil Rights (OCR). The federal rules (well, the draft federal rules) have an additional kicker, though. Breaches exceeding 500 individuals are posted on the OCR website (the so-called “Wall of Shame”) and the breaching organization must “provide notice to prominent media outlets serving the State or jurisdiction.” Yes, that’s right – tell the media about your goof-up and then become permanently enshrined on a government website to boot. Serious stuff. Very serious stuff.

How many ways are there to count to 500? One thing that I hadn’t fully appreciated was that just because an individual’s information was released doesn’t mean that it constitutes a breach. Under our state law, if the information is “publicly available,” such as address or phone number, it doesn’t count as a breach. Under federal law, it is a breach, but notification is only required in cases which “pose a significant risk of financial, reputational, or other harm to the individual affected.” In short, even though we had spilled information on over 14,000 patients, not all of them required notification, and from a federal perspective, not all of them counted toward the magic number of 500. Figuring this out turned out to be somewhat of a Herculean effort and much more art than science.

Who’s responsible? This would seem like a stupid question, especially since I’ve already said that we were responsible, but given that this is healthcare, we were given the unique opportunity to drag others down with us. This question gets into the relationship between covered entities (like physician practices) and business associates (like contractors who work for physician practices and need access to PHI in order to do their jobs.)

We were hired by a physician contracting network to help manage the EHR implementations of hundreds of their member practices. In HIPAA terms, the practices are the covered entities, the network is the business associate to each of these practices, and we were the contractor to the network with a “downstream” business associate agreement (meaning that we’re not connected directly to the practices, but only through our contract with the network.) Clear as mud, right? As complicated as this seems, this type of arrangement (and even more Rube Goldberg-like varieties) is NOT unusual, and indeed is very common and will become even more so as we move to accountable care and other types of complex business relationships.

It started to become clear as we worked our way through this that we were headed for a perverse outcome. Though we were the ones who made the error, we were acting on behalf of physician practices who were ultimately responsible for the stewardship of their patients’ information. Thus, in the eyes of the law, primary responsibility fell on them, not us. Indeed, as we were only later to find out, there was some question about whether the federal Office for Civil Rights had any jurisdiction over us because we were a “contractor,” not a direct BA to the practice, and thus possibly outside of their reach.

Seriously??!! For those of you who are into the inside baseball of federal healthcare privacy law, the HITECH statute does not specifically give OCR authority over contractors, whereas OCR’s draft regulations do. Until those regulations are made final, we won’t really know whether they have such authority or not. (The final OCR rule is supposed to be issued very soon.) This ambiguity did not change our response or our diligence to comply with all state and federal laws, but in my opinion, this clearly points to a huge gap in the current monitoring and enforcement framework. OCR should have the authority to follow a data spill as far into the contracting chain as they need to go.

Our response

The days after the incident were a vortex. Everything, big and small, got shoved aside as we scrambled to assess the situation and develop our response.

The first thing we did was to notify our attorneys, our customer, my board chair, our staff, and our liability insurer – in that order. We needed our attorneys to get to work on understanding the situation and our legal requirements, and to also give us a high-level assessment of the possible scope of damage. Armed with that information, we notified our customer, making clear that we did not yet know all of the details or all of the legal ramifications, but that we took full responsibility and we would cover whatever costs and activities that resulted from our error.

At the recommendation of our attorneys, we also hired a private investigator to troll Craigslist and local pawn shops to try and recover the laptop. If we could recover it and show that it hadn’t been accessed in the time that it was out of our control, we’d arguably be in the clear. (Though NOT from our own internal investigation, which would have continued apace – just because we didn’t suffer the consequences wouldn’t reduce the gravity of the mistake.)

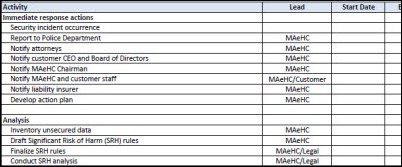

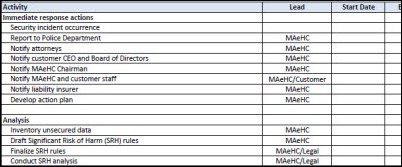

We had to get our arms around the problem, and the only way my linear mind knows how to do that is to put together a project plan. There were so many moving parts, and so many judgments to make, that we had to treat this just like any complex project that we manage on our business side. The project plan that we developed is here. The most intense activities on it were:

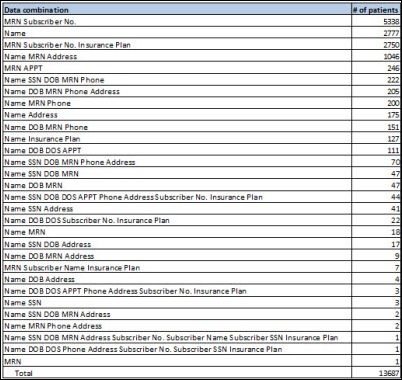

Determine what information was released on each individual. The individual information contained in the files had mostly patient names, but there were some providers listed as well. About 80% of the individuals were patients, but 20% were providers, the vast majority of whom were referring providers, not the providers from the practices themselves. We tend to focus on patient information when we think of breaches, but hey, providers are people too, you know. We also determined that of the 14,475 records, 788 were duplicates (i.e., records on the same patient), so we were able to reduce our count to 13,687.

Each individual record turned out to contain some combination of the following: name, address, phone, SSN, DOB, MRN, date of service, appointment, insurance subscriber number, insurance subscriber name, insurance plan. But it was a patchwork. No single record had all of these pieces, so we had a lot of analysis to do. Most of these categories were straightforward, but some needed further deciphering. One bit of good news was that the MRN in this case was not an active MRN but an obsolete one – it was the legacy MRN from the old system and would be replaced by a new MRN in the new EHR system.

Determine which patients would have “significant risk of harm.” I thought that this would be a concept well established in law or regulation, but it turned out to require considerable judgment on our part. As I noted earlier, these files were error logs from data migration, so they were not clean – the information on each patient was incomplete or corrupted in some way. In order to really determine the risk of harm, we were worried not only about the individual pieces of patient information that might have been disclosed, but also about whether a combination of innocuous data might be revealing when taken together. For example, a date of birth by itself is innocuous; however, DOB and name would obviously be a concern.

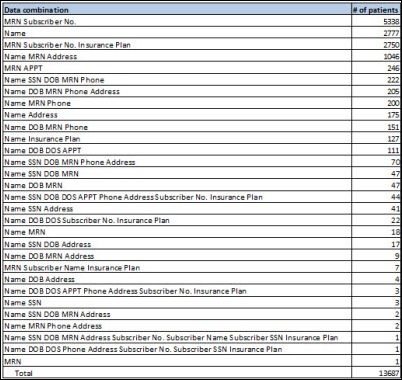

We had to do a detailed analysis of every combination of data, which revealed that there were 30 different combinations of the information above, meaning that each of the 13,687 individuals was represented in one of 30 ways. We created a frequency table showing the frequency of each combination. For example, we had 5,338 instances of MRN-subscriber number, 2,777 instances of name only, 222 instances of name-SSN-DOB-MRN-phone, and so on. The full table is below.
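For readers who think in code, the tabulation described above can be sketched roughly as follows. This is a minimal illustration, not the analysis we actually ran: the field names, the record layout (one dict per individual), and the sample records are all assumptions made up for the example.

```python
from collections import Counter

# Hypothetical field names. A missing or blank value means the field
# was absent from that individual's error-log entry.
FIELDS = ("name", "address", "phone", "ssn", "dob", "mrn",
          "date_of_service", "appointment", "subscriber_number",
          "subscriber_name", "insurance_plan")


def field_combination(record):
    """Return the set of fields actually present in one record."""
    return frozenset(f for f in FIELDS if record.get(f))


def combination_frequencies(records):
    """Count how many individuals fall into each field combination."""
    return Counter(field_combination(r) for r in records)


# Illustrative records only, not data from the incident.
sample = [
    {"mrn": "A1", "subscriber_number": "S1"},
    {"mrn": "A2", "subscriber_number": "S2"},
    {"name": "Pat Example"},
    {"name": "Lee Example", "ssn": "000-00-0000", "dob": "1970-01-01", "phone": ""},
]

table = combination_frequencies(sample)
for combo, count in table.most_common():
    print(count, "-".join(sorted(combo)))
```

Using a `frozenset` as the key means the order in which fields appear in a record does not matter, so "name-DOB" and "DOB-name" land in the same row.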

In analyzing this data, we were confronted with the dilemma of how to determine significant risk of harm. Federal and state rules have definitions, noted above, but they leave a lot open to judgment. We decided that we would apply a simple rule based on the combinations of information that we had. Any individual who had their name PLUS either their SSN or DOB would be considered to have a significant risk of harm if their data was accessed. As it turns out, we had exactly 1,000 such cases. We determined that everyone else’s information was either meaningless without additional information (for example, MRN and subscriber number) or was already publicly available (for example, address, phone number, etc.) We thus concluded that of the 13,687 individuals whose information was stolen, 1,000 (7%) would have a significant risk of harm if their data was actually accessed, and 12,687 (93%) would not.
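The rule itself is simple enough to write down in a few lines. The sketch below assumes each combination is represented as a set of hypothetical field names; the three rows are the only counts quoted in this column, so the total it produces covers those rows alone and is not the full 1,000.

```python
def significant_risk(fields):
    """Rule from the text: name PLUS either SSN or DOB."""
    fields = set(fields)
    return "name" in fields and bool(fields & {"ssn", "dob"})


# The three combination counts quoted in the text (field names are assumed).
known_rows = {
    frozenset({"mrn", "subscriber_number"}): 5338,
    frozenset({"name"}): 2777,
    frozenset({"name", "ssn", "dob", "mrn", "phone"}): 222,
}

# Sum the individuals in every combination that trips the rule.
at_risk = sum(count for combo, count in known_rows.items()
              if significant_risk(combo))
print(at_risk)
```

Applying the rule to combinations rather than to individual records keeps the judgment call in one place: change the rule and the whole count updates.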

Obviously things were feeling a little better now that we knew we were dealing with 1,000 patients instead of over 14,000. Not that we took it any less seriously, but it started to feel more manageable and, of course, it meant that our legal and financial exposure was probably smaller as well.

Now that we knew what we were contending with, we could address the issue of notification. As I noted earlier, the question of who was responsible from a legal perspective was not as clear-cut as assumed. So, too, it was not immediately obvious who had to notify whom about what. Federal law is clear – it’s the responsibility of the covered entity, regardless of how badly their contractors might screw up. The state law does not say who the notification requirement falls on. While the presumption would be that it is on the party disclosing the data (in this case, us), the MA law is not specific to healthcare and thus does not delineate covered entity versus business associate responsibilities.

We were faced with yet another dilemma and decision. The state law would suggest that we (the contractor) should notify patients and the state government, whereas federal law says that the practices (the covered entities) should notify patients and the federal government.

Confusion. On the one hand, we wanted to take full responsibility for our mistake and shield the practices as much as possible from this. On the other hand, we did not want to confuse patients by having them receive one letter from the practice to fulfill the federal requirement and another letter from us to fulfill the state requirement. We similarly wanted to have consistent reporting channels to federal and state authorities to make it easier to respond to their enquiries.

Discussing this with our customers and our attorneys, we jointly decided that we should use a unified approach by which all notifications and reports would come from the practices. However, MAeHC would manage all the logistics of such notifications and reporting and would be prominently named in the reports and notifications. This allowed us to fulfill our desire and obligation to be accountable while at the same time keeping the process consistent and clean from federal, state, and patient perspectives.

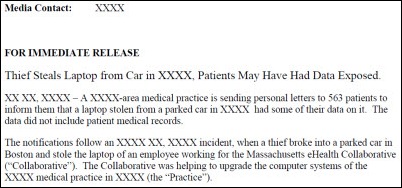

So how did this all shake out? We started with 14,475 records of patients and providers from 18 practices. Of these cases, 1,000 patients from seven practices were judged to have a significant risk of harm from the data theft. It turned out that of the seven practices, only one practice hit the magic 500, the threshold for being on the Wall of Shame and for media notification. So while all 1,000 patients had to be notified and all seven practices had to report the breach to the state and federal authorities, only one practice would face broad public exposure for 500+ individuals.
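The per-practice logic can be sketched as below. The practice names and counts are invented purely for illustration (I am not publishing the real breakdown), and treating the threshold as "500 or more" is an assumption; check the exact comparison against the final rule before relying on it.

```python
def notification_plan(at_risk_by_practice, threshold=500):
    """Map each practice with at-risk patients to its obligations.

    threshold and the >= comparison are assumptions for this sketch.
    """
    plan = {}
    for practice, count in at_risk_by_practice.items():
        if count == 0:
            continue  # no at-risk patients, nothing to report
        plan[practice] = {
            "notify_patients": True,
            "report_to_authorities": True,
            "media_notice": count >= threshold,
        }
    return plan


# Made-up counts, not the real per-practice figures.
example = {"practice_a": 620, "practice_b": 140, "practice_c": 12, "practice_d": 0}
plan = notification_plan(example)
for practice, duties in plan.items():
    print(practice, duties)
```

The point the sketch makes is that the threshold is applied to each covered entity separately, not to the incident as a whole.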

Having crossed the magic 500 threshold, this practice had to provide public notice of the incident through a major media outlet. The law provides some flexibility in determining the most appropriate media outlet. The practice, with our advice and helpful guidance from our media consultants, decided to provide notifications to the two largest television news stations in our state.

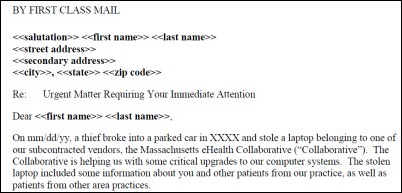

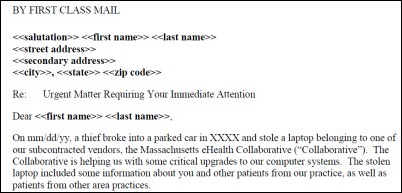

All seven of the practices agreed to have us manage the logistics of the government and patient notifications and reporting, so we gathered the letterhead information from all of the practices and printed and stuffed 998 patient notices and sent them by first-class mail. This itself was quite an exercise because the patient identification information was not always clean, so we had to visit each practice and manually confirm each patient’s address with practice staff. One practice had its own stationery that they wanted us to use. Another practice wanted to hand sign each notice. We had to build these special requests into our process as well.

We were unable to find valid addressing or contact information for two individuals, though we tried a variety of means (including those slimy Internet services that will sell personal information for a fee.) Yet another bind and another decision to make. Sigh. Federal law says that if you can’t directly contact 10 or more individuals, you have to post the notice on the organization’s website or provide notice to a major media outlet in the area where the patient lives, whereas if it’s fewer than 10, you can provide substitute notice by an alternative form of written, telephone, or other means.

Our problem was that we couldn’t validate where these two individuals lived in the first place, which is why we hadn’t been able to contact them, so an “alternative form”, whatever that means, didn’t solve the problem. We could try to apply the rule for 10 or more, but the practice, like most small practices, doesn’t have a website, and we didn’t know where the patients lived so we couldn’t target media notices any more than we already had. We concluded that since we had already provided media notice to the two most prominent TV stations in our state about the breach, we had done all that we could. And as a practical matter, if we ourselves were having trouble identifying the two individuals despite our best efforts with all of the resources at our disposal, there was not much chance that anyone else could use the information to harm the individuals in question.
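As a rough sketch, the substitute-notice rule we were wrestling with looks like this, taking the cutoff as "10 or more" versus "fewer than 10". The function name and the return strings are mine, not regulatory language.

```python
def substitute_notice(unreachable_count):
    """Pick the substitute-notice route for individuals who cannot be reached."""
    if unreachable_count <= 0:
        return "none needed"
    if unreachable_count < 10:
        return "alternative written, telephone, or other notice"
    return "website posting or major media notice"


print(substitute_notice(2))
```

What the function cannot capture is our actual problem: with two unreachable individuals the rule points to an "alternative form" of notice, which is no help when you do not know where the person lives.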

As an added measure to patients, we covered the cost of credit monitoring for any patient who chose to have that service. It felt like the least that we could do.

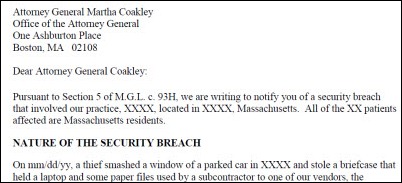

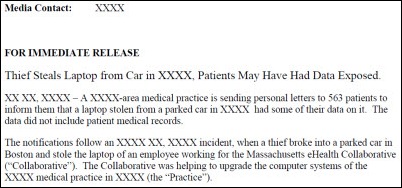

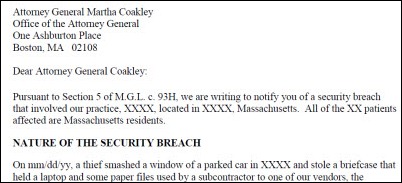

Our sanitized notification to the MA AG and OCABR is here, our sanitized patient notification is here, and our sanitized media notification is here. I can’t share the federal OCR notification because it’s all done on the OCR website and we therefore don’t have a copy.

What was the aftermath?

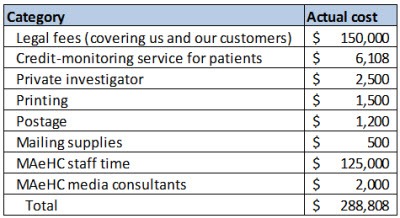

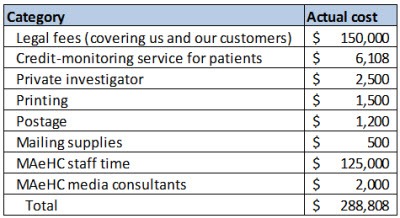

The hard costs of this incident were as follows:

As I mentioned earlier, we’ve never skimped on lawyers or insurance, and it paid off here. We’ve been paying $18,200 per year in insurance premiums for the last five years, and our insurance ended up covering all of the above costs except for our staff and media consultants’ time (for a total of $161,808) minus a $25,000 deductible. Of course our premiums will now go up going forward, to $23,000 per year with a $50,000 deductible. Though this reflects a calculation on the insurer’s part of higher actuarial risk, I believe that we are actually lower risk now that we’ve gone through this incident, but I’m not going to argue with them about that.
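A back-of-the-envelope check on those insurance figures, for anyone weighing whether coverage like this is worth it. The sketch assumes the $161,808 is the amount covered before the deductible is applied; the sentence above can be read more than one way, so treat the payout line as illustrative.

```python
# Figures quoted in the text.
ANNUAL_PREMIUM = 18_200
YEARS_INSURED = 5
COVERED_COSTS = 161_808   # assumption: covered amount before the deductible
DEDUCTIBLE = 25_000
NEW_ANNUAL_PREMIUM = 23_000

premiums_paid = ANNUAL_PREMIUM * YEARS_INSURED
insurer_payout = COVERED_COSTS - DEDUCTIBLE
premium_increase = NEW_ANNUAL_PREMIUM - ANNUAL_PREMIUM

print(f"Premiums paid over five years: ${premiums_paid:,}")
print(f"Insurer payout after deductible: ${insurer_payout:,}")
print(f"Annual premium increase: ${premium_increase:,}")
```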

The soft costs of this are obviously the opportunity cost of the roughly 600 hours of staff time that we ended up throwing at this, as well as the time invested by our customers and the practices. The longer term impact on our reputation and the reputation of our customers is difficult to calculate, but nonetheless real.

Needless to say, the private investigator didn’t turn up anything. We filed all of our reports to federal and state authorities. We received follow-up questions from the state OCABR, and the practice received follow-up questions from the federal OCR. We paid for a separate attorney for the practice to represent them with OCR. In addition, we were asked to participate in a phone call with OCR to provide additional information and any learnings that would help other organizations better interpret and comply with privacy and security and breach notification rules, which we were happy to do.

Both the federal and state authorities acted immediately upon receiving our reports and were very responsive and helpful to our questions. It’s clear that they take these reports seriously and hold the privacy interests of our citizens as their highest priority. As a taxpayer and a citizen, I felt extremely well-served. As the party being investigated, I felt perhaps a little bit too well-served – just joking!

We and the practices received 18 calls from patients. It is a sad reflection on the times that almost all of the calls were to confirm the veracity of the letter notification — the patients whose data we had breached were concerned that our notification might itself be an identity theft scam!

Only 88 (fewer than 10%) of the affected individuals chose the credit monitoring option. Some people may never have read the letter, others may have just ignored it, and perhaps others are already receiving credit monitoring from other breaches of their information. (For example, I myself am currently receiving free credit monitoring from my credit union, which had a breach of credit card numbers last year.)

The media notices that we provided to the television stations were received (we confirmed that), but neither outlet aired the story as far as I know. A Boston Globe story published later after the Sony data breach listed a number of area breaches, including ours, but I’m not aware of any other media reporting on the story.

The one practice that hit the 500 threshold is listed on the OCR “Wall of Shame” website. I’m not going to point you to them because the error was ours, not theirs, and I don’t want to bring any more attention to them than they’ve already had to bear.

The reaction of our customers was concern (as expected) and incredible understanding (not expected). Soon after we had notified them, I addressed the board of directors of the contracting network to explain the incident and the actions we were taking in response. One director, a physician, expressed his concern that many physicians leave their laptops in their cars without realizing how grave the implications of a simple theft might be. The practices themselves were understandably focused on what harm might come to their patients, and what this incident might do to the years of trust that they had instilled in their patient relationships.

I was humbled by the sincere concern that each of the physicians expressed about their patients, and the understanding and generosity of spirit that they showed regarding our role in this mess. They would have had every right to scream at me – or worse. Most of them had only limited awareness of who we were because we were hired by their contracting network and, among the EHR vendor, the hardware vendor, the contracting network, and us, it was hard for the practice to tell any of us apart. And then, a mistake by us became their violation of federal and state law, undermined their relationship with their patients, and forced them to take time away from their lives to understand the legal thicket that they had somehow gotten snared in. I hope that if I’m in a similar circumstance I will show the patience, professionalism, and generosity of spirit that they showed to us.

My company took a number of remediation actions. Right after the incident, we immediately destroyed all patient data on all company mobile devices and temporarily banned any removal of PHI from a customer facility. We conducted a detailed workflow analysis of all of our practice-facing activities, identified the use cases in which our staff might have need for patient data, and provided detailed policies and encryption tools for them to easily do their jobs and still meet our security standards. The tools that we deployed are TrueCrypt, SecureZip, and ZixMail.

We bolstered our company processes to require formalized company authorization for individual personnel to have access to PHI on a project-by-project basis. We also held two mandatory trainings with all staff immediately after the incident. Only after all of this did we allow our practice specialists to resume their normal activities.

As for the employee who caused the incident, no, they were not fired. Indeed, our customer specifically requested that we NOT fire the individual because they valued the individual’s skill and expertise and believed this incident to be an accident. That is our company view of the situation as well. While there was obviously individual culpability for the incident, we also recognize that there is company culpability as well, going all the way to the top (i.e., me.)

As for me, I believe that we are a stronger company for having gone through this experience. I truly regret that it came at the expense of the disclosure of 1,000 innocent individuals’ personal information, and probably eroded, even if just a little bit, the trust between these patients and their doctors.

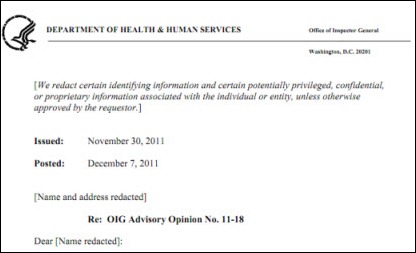

We very recently received a letter from OCR informing the practice that after having investigated the practice, its internal policies, the business associate arrangements, the patient and media notifications, and the credit monitoring service arrangements, they were found to be in “substantial compliance” with the federal Privacy and Security and Breach Notification Rules. And, according to the letter, the issues related to the complaint “have now been resolved through the voluntary compliance actions of the practice. Therefore, OCR is closing the case.” Roughly seven months had passed between the initial incident and this final letter from OCR. Hallelujah.

I hope that others can learn from our mistakes (I know I’m pretty sick of learning from my own mistakes and would be delighted to learn from yours!) It turns out that our incident was not that uncommon. According to the OCR database, over 30% of the 372 incidents involving over 500 individuals stemmed from theft or loss of a laptop or other portable device. And that’s only the reported incidents – who knows how many go unreported? I suspect that there have been WAY more than 372 such incidents over the last three years across the country, and as EHR penetration rises dramatically over the next few years, we can expect to see corresponding exponential growth in security incidents as well.

For what it’s worth, some observations and lessons learned from our experience are as follows:

- Whether you’re a physician practice or a contractor, look in the mirror (or use your phone camera) and ask yourself right now: do you know how the people on the front lines are handling personally identified data? Have you put in place the awareness, policies, and technologies to allow them to do their jobs efficiently AND securely? A meaningful self-examination will reveal that you are almost certainly not as good as you think you are (unless you’ve had a recent security incident of your own.) That was certainly true for me, and I suspect that I’m in very good company.

- Assume that your portable devices contain sensitive information, even if your vendor tells you that they don’t. Most EHR systems are designed so that medical records are not stored locally on a laptop, yet, in our investigation of this matter, we found plenty of instances of the EHR saving temporary files locally that were later not purged, or of clinical users saving documents locally because they weren’t aware of the risks. While there is no doubt that EHR software should be better designed, and EHR users should be better trained, I wouldn’t bet on it. Put in place policies and technologies as a safety net, just in case software and users don’t do what they’re supposed to (because that’s never happened before, has it?) I now have whole-disk encryption on my laptop even though I never work with practice-level data. Sure, it takes about 20 seconds longer to boot up while it’s decrypting. But rather than being an inconvenience, I actually have found that I use this time to take comfort that I’m responsibly protecting my company’s and my customers’ privileged information.

- If you’re in a physician practice, know who’s working in your practice and get a clear statement from the contractor at the outset of their work of what access to patient information they will need, and how they will be handling such information. As an industry, I fear that we are inadvertently letting business associate agreements absolve us of the diligence that our privacy and security protections are meant to ensure. Hey, we’ve got a BA, so our contractors can do anything, right? You’re obviously not expected to be a privacy and security expert – that’s what you’re hiring for. But understand that it’s your responsibility in the end if that contractor drops the ball on privacy and security, so it’s worth making yourself comfortable that they are reputable, diligent, honest, and competent. We are now providing all of our customers with a statement delineating what we expect to need access to and how we will handle such information. This isn’t a CYA exercise, because it doesn’t absolve us from any responsibility. It’s simply a vehicle to force acknowledgement of the seriousness of privacy and security, and to flag any differences in expectations at the earliest possible opportunity.

- Once a security incident has occurred, set everything aside and create process and structure right away to identify what’s happened, prevent any further incidents, notify your stakeholders, and get to work on meeting your legal, business, and ethical responsibilities (hopefully, these are all perfectly aligned.) Create a crisis response team with your attorney, your customer (if you’re a contractor), and your own staff. It’s hard not to panic (believe me), but laying out a plan will help to identify what you know and what you don’t know and will allow you to set your priorities accordingly. In my case, it just helped me breathe. Some guides that I wish had been available when we went through this are available here and here.

- Don’t underestimate the effort that will be required to disentangle, respond to, and remediate the breach. If you’re a contractor, notify the organization that hired you right away to let them know the facts of the incident, the measures you are taking, and any immediate actions that they need to take (usually none.) There’s a temptation to wait to notify your customer until you know all of the facts and implications, but our experience is that sorting those out takes too long. In our case, we notified the contracting network and its board immediately, who then decided to wait a little longer until we understood more of the implications for each practice before notifying each of the affected physicians directly. Good thing too – as it turns out, only seven of 18 practices had any legal liability for the breach, so it made sense to wait a little bit to sort this out. But realize we’re talking about days and maybe a couple of weeks – not months.

- Keep a daily log of your activities from Day One. Hours quickly turn to days which even more quickly turn to weeks, and someone will inevitably ask why you didn’t notify them sooner. Having a log of your activities will allow you to demonstrate that you responded immediately and provided notifications as soon as you figured out what exactly had happened and who needed to be notified about what.

- “Man up” and take responsibility for your actions. (Sorry for the sexist reference – I just think it sounds really good.) While we reported the incident to the police right away and immediately set the wheels in motion for compliance with federal and state regulations, I was struck by how easy it would have been to just let it slide, particularly as I contemplated the legal liabilities we might face, the financial penalties that could be imposed, and the loss of business that we might suffer. If we didn’t have a very recent backup of the laptop, we could easily have convinced ourselves that there were only innocuous error logs on the computer and stopped right there and reported nothing except a random theft. And we would have come to that conclusion honestly (for the most part.) I shudder at the thought of how many of these incidents go unreported each year, some perhaps not so honestly.

- Take responsibility as an organization. Bill Belichick, the coach of the New England Patriots (Yeah! Wahoo! Go Pats!!) says that an execution error by an individual is really a lapse in education by the coach. The simplest thing for us to have done (aside from not reporting the incident at all) would have been to declare that this was the action of a rogue employee, contain the investigation and remediation to that, and pat ourselves on the back for another job well done. In our case, it became clear as we investigated this incident that there was a certain amount of “there but for the grace of God go I” among our entire staff, at which point we realized that this was an individual failing AND a company failing, which meant that ultimately it was a management and leadership failing. Framing it that way sent a strong message to our team that we’re all in this together and that we need to be honest, transparent, and professional about our flaws. It also led to our building system approaches that will be more long lasting because they were developed organically from the ground up with staff input rather than being imposed from above. Any security professional will tell you that building security considerations into routine workflows, rather than tacking them on as additional workflows, is not just a best practice, it should be the only practice. Or, to paraphrase what I heard from a yoga instructor the other day, we have to move from doing security to being security. Om.

In the end, I am incredibly confident that that laptop was either stripped down and sold or tossed into a dumpster. I am almost 100% sure that no one accessed the patient information on that machine. In my opinion, the penalties we paid for an honest mistake with very low risk (a random theft of a password-protected laptop containing a patchwork of demographic data) seem disproportionately high ($300,000 to us; national public exposure to the practice.) That said, I also recognize that we’re all feeling our way through this incredibly complex area, and an appropriate balance will eventually be struck, though it will take time.

Still, when all is said and done, I’m incredibly proud to be a member of a society that errs on the side of valuing the integrity of its citizens, as messy as that can sometimes be.

Micky Tripathi is president and CEO of the Massachusetts eHealth Collaborative. The views expressed are his own.
