G. Cameron Deemer is president of DrFirst of Rockville, MD.

Tell me about yourself and the company.

DrFirst pioneers software solutions and services designed to optimize healthcare provider access to patient information, improve the doctor’s clinical view of the patient at the time of care, and enable more effective, efficient administration and collaboration across a patient’s circle of caregivers.

Our growth is driven by a commitment to innovation and reliability across a wide array of service areas, including medication history and e-prescribing, secure messaging and clinical data sharing, and patient behavioral education and medication adherence. We are a Surescripts Gold Certified provider and have the number-one-ranked standalone e-prescribing software in the Black Book Rankings. We are proud of our track record of serving more than 40,000 providers and more than 270 EMR/EHR/HIS vendors nationwide.

An estimated 50 to 60 percent of office-based physicians were e-prescribing last year, compared to 40 percent in 2008. How much of that increase can be attributed to government incentive programs?

For over 10 years, the medical industry has relied on e-prescribing to increase patient safety by reducing errors and adverse drug events caused by illegible handwriting, drug-to-drug and allergy interactions, incorrect dosing, and duplicate therapy. Recent federal legislation aimed at reforming the healthcare system, such as the Medicare Improvements for Patients and Providers Act, PQRS, and the HITECH Act and its Meaningful Use program, has had a real impact on the overall adoption of e-prescribing in the medical space. In 2007, only six percent of physicians were e-prescribing, compared with 65 percent in 2012, according to reports.

It is important to note that despite these impressive gains in medicine, dentistry has not yet embraced e-prescribing. This is partly due to several barriers: misconceptions about its value given the relatively low volume of prescriptions and the small set of frequently prescribed medications; concerns about the cost of these solutions; the absence of e-prescribing functionality in many electronic dental records; and the lack of mandates from relevant governing bodies. DrFirst believes the next step for e-prescribing is to help the dental community recognize its importance and benefits for patient safety as well.

Which companies do you see as your direct competitors, and why is your offering superior?

DrFirst doesn’t really have any direct, apples-to-apples competitors. Beyond e-prescribing, DrFirst offers controlled substance eRx, adherence, and patient engagement solutions directly to providers and EHR vendors. It also has products that help hospitals and enterprises with medication reconciliation and management, automated discharge summaries, and HIPAA-compliant secure clinical data exchange.

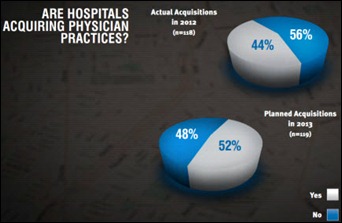

How is the shift from small independent practices to large groups or health system-owned practices impacting your business?

Part of our business continues to support smaller independent practices with affordable e-prescribing and Meaningful Use technology, attestation consulting services, and a variety of apps such as Akario, our free HIPAA-compliant secure messaging and texting solution. However, we do not expect the shrinking independent physician market to hurt DrFirst’s business, because over the last eight years we have diversified our product offering to also support the needs of enterprise-level practices, hospitals, health systems, payers, and HIT vendors.

How does consolidation in the EMR vendor market impact your business?

Consolidation is a market reality and will continue to be so. What’s interesting are the opportunities being created for DrFirst as consolidation occurs.

When it comes to EMR vendors, the reasons these HIT companies chose to buy rather than build, and to work with DrFirst, are even stronger today. Scarce development resources for enhancing core products, the development required to meet Meaningful Use capabilities, and the complexity, effort, and ongoing cost of delivering full-featured e-prescribing are all real challenges. And it’s getting harder. For instance, continual changes to industry standards require ongoing development effort and cost, while key functionality enhancements such as electronic prescribing of controlled substances have introduced new, complicated requirements and expensive audits to the market. Many companies also struggle to deliver and enhance a full-featured e-prescribing system, which leaves their providers using inferior solutions.

Consolidation also increases complexity. It exacerbates these problems because multiple products must either be maintained in separate code sets or be merged onto a single e-prescribing solution, which is a big task on top of all the other important work to be done. We are seeing a dramatic uptick in qualified interest from companies facing these issues after consolidating with companies that had managed their own e-prescribing applications and connections to industry networks. They increasingly understand the real difficulty and cost of keeping up with e-prescribing standards and innovation, and of managing 24x7 networks to proactively support the prescriptions their providers write. They realize the burden and opportunity costs are too great when DrFirst can do it for them. As a result, we have added more EMRs and new customers to our integration services as their technology partner.

We are succeeding where others are not because of the options companies can choose from to align with their strategy and needs. They can white-label our award-winning e-prescribing solution, Rcopia, or use us as the “e-prescribing engine” that sits under the hood of their own e-prescribing user interface. In the latter case, they control the e-prescribing look and feel for their users while we handle industry standards, enhanced functionality, and the complexities of electronic prescribing of controlled substances. This helps companies get to market quickly on a common platform that delivers full-featured e-prescribing, benefit from continued innovation that further differentiates their capabilities, and reallocate their internal product, development, and operational resources to other priorities.

A number of key services in our solutions portfolio can be integrated by any HIT vendor, whether or not it needs an e-prescribing solution, which lets us cut horizontally across the entire HIT industry. This is a growing part of our business. These services also offer the consolidation benefits discussed earlier, because a single HIT vendor can deliver them as a common service across the many EMRs it manages.

How well is the market embracing e-prescribing for controlled substances?

For years, the industry believed that the inability to e-prescribe controlled substances (Schedules II through V), combined with DEA restrictions, was the single greatest barrier to broad adoption of e-prescribing. Many practices that prescribe large numbers of controlled substances avoided e-prescribing altogether because it fragmented their workflows.

We spent years working with the DEA and AHRQ through the research, development, and pilot phases of meeting the DEA requirements in order to remove this obstacle so that all providers could benefit from e-prescribing. We experienced first-hand the many hurdles in getting to market with EPCS. After we successfully completed the difficult certification process, we soon realized that the industry wasn’t aware that many of the barriers had already been removed.

Recently, more EHR vendors have begun to partner with us to offer their physicians a high-quality, scalable, DEA-compliant, fully certified, audited, and low-cost solution through EPCS Gold. Vendors no longer have to choose between competing development priorities, because EPCS Gold lets them greatly reduce development cost and effort and eliminate the work of audits and certification. It also spares them the day-to-day system operations, identity proofing and authentication of providers, and ongoing security and compliance work involved in building and operating a controlled substance e-prescribing system that meets DEA requirements, so they can get to market quickly.

Since June, we’ve seen a 25% increase in the number of pharmacies enabled to accept EPCS. That total now includes more than 12,000 pharmacies in 38 states, among them several major national chains such as Walgreens, CVS, Rite Aid, and Osco. Interestingly, some states have begun adopting legislation requiring real-time prescription monitoring to combat prescription drug abuse. New York State’s recent I-STOP law, for example, will make EPCS mandatory by the end of 2014.

Are there new obstacles affecting your business today, or do you see e-prescribing becoming more stable?

Although it is tempting to view e-prescribing as a stable technology, it actually remains very challenging for much of the vendor community. EHR and HIS vendors face ongoing issues, including regular enhancement and certification by Surescripts and MU certifying organizations; compliance with shifting e-prescribing regulations in all 50 states; developing and administering controlled substance prescribing capabilities, and then complying with varying state regulations on controlled drug prescribing; ensuring delivery of electronic prescriptions when the pharmacy network fails; and, for hospitals, performing the database maintenance required to integrate effectively with information delivered from ambulatory systems.

In many cases, workflows are also inefficient, yet improving them is a low priority because of other development demands such as MU Stage 2. Many of these vendors have reached out to DrFirst to take advantage of our flexible e-prescribing platform and offload these burdens. We expect continued growth in this sector as vendors seek outsourced solutions.
