
Pretzel Logic: The Quality Measure Conundrum 6/24/11

As many readers noted in my last posting on pain points of MU, quality measures are a separate and not very appetizing kettle of fish. Part of the problem is that policy-makers and users alike have been engaged in a bit of magical thinking – to wit, all we need to do is get docs using EHRs and the measures will basically create themselves.

My friend Dr. Marc Overhage used to compare this type of thinking to the now-defunct theory of spontaneous generation, which held that life could form from inanimate matter (hence the mice emerging from the pot of sawdust). That theory has been put into the dustbin of history. We need to do the same with the theory of spontaneous generation of quality measures.

Though Clinical Quality Measurement (CQM) is just one of 20 Meaningful Use Stage 1 requirements, in practice this requirement has felt like a lot more than just 5% of the effort. One might have expected the CQM requirement to simply apply formulas to clinical data already entered into the system as part of clinical workflow. Instead, it turns out that a fair amount of data needs to be entered just to meet the measure requirements themselves.

In fact, a recent CSC analysis has quantified this extra effort, estimating that only about half (48%) of the data needed to drive the required CQM calculations would come from data already entered to meet other requirements. Put another way, the CQM requirement is, by itself, like a whole new set of MU requirements.

There are 44 possible measure options for MU Stage 1, from which you’ve got to submit at least six. Three of the six have to come from either the Core Set or the Alternate Core Set, and the additional three must come from the Additional Set, as described below:

- Three Core Set measures: hypertension, tobacco use/counseling, adult weight screening

- Three Alternate Core Set measures (for those for whom core set doesn’t apply): child/adolescent weight screening, adult influenza immunization, childhood immunization status

- 38 Additional Set measures (eligible professionals, or EPs, must submit three of these 38)

(PS — I realize that this barely makes sense, but people, I can do only so much with the material that’s been handed to me!) You can find the full list of measures here, a more clinically relevant list here, and very detailed descriptions that only a mother could love here.
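Because the selection rules above are easy to misread, here is a minimal sketch of them as a checker. It assumes the simplified summary given here: three measures from the Core or Alternate Core Set, plus three from the Additional Set. The short measure names and the `additional_1` through `additional_38` identifiers are hypothetical stand-ins for the real measure IDs. The sketch also ignores any further conditions on when the Alternate Core Set may be used.

```python
# Hypothetical short names; the real measures carry NQF identifiers.
CORE = {"hypertension", "tobacco", "adult_weight"}
ALTERNATE_CORE = {"child_weight", "adult_flu", "child_immunization"}
# Hypothetical placeholders for the 38 Additional Set measures.
ADDITIONAL = {f"additional_{i}" for i in range(1, 39)}


def is_valid_selection(core_like: set[str], additional: set[str]) -> bool:
    """Check a six-measure Stage 1 selection against the simplified rules:
    three from the Core or Alternate Core Set, three from the Additional Set."""
    if len(core_like) != 3 or not core_like <= CORE | ALTERNATE_CORE:
        return False
    if len(additional) != 3 or not additional <= ADDITIONAL:
        return False
    return True


# 3 core + 3 alternate core + 38 additional = 44 possible measures
assert len(CORE | ALTERNATE_CORE | ADDITIONAL) == 44
```

For example, `is_valid_selection({"hypertension", "tobacco", "adult_weight"}, {"additional_1", "additional_2", "additional_3"})` returns `True`. Swapping an Alternate Core measure into the Additional Set slot makes it return `False`.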

So the MU Stage 1 thing may be adding some insult to injury by asking physicians to do a lot of documentation purely for the sake of measurement. Just what is this additional documentation? There seem to be four categories:

- Higher levels of documentation than suggested elsewhere in the MU requirements. For example, the e-prescribing requirement only asks that more than 40% of your permissible prescriptions be transmitted electronically, and the requirement doesn’t apply to you at all if you write fewer than 100 prescriptions during the reporting period. However, any measure that relies on prescription information is going to demand that you e-prescribe for 100% of your patients.

- Standardized codification of documentation in ways not required elsewhere. For example, you are not required to have LOINC-encoded labs in your EHR, though your EHR is required to accept them if provided. Well, measures are defined using LOINC codes, so somewhere your local codes are going to have to be mapped to LOINC codes in order to automate measure calculation. The same is true for RxNorm, SNOMED-CT, and other code sets.

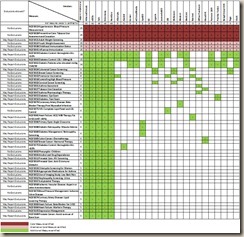

- Different types of clinical information from those required elsewhere. No other MU requirement calls for medical history, yet many measures have look-back periods that may require entering structured history (such as the results of past screening tests or procedures). The biggest culprit in this category is exclusion criteria. If they are ignored, they will in most cases work against the physician by incorrectly including patients in a measure denominator, thus giving the physician less credit than they deserve. While only two of the six Core and Alternate Core measures have any exclusion criteria, a whopping two-thirds of the optional measures (25 of 38) include some type of exclusion. (See table below.)

- Different sources of information from those required elsewhere. Attribution rules may hold physicians accountable for activities that happen outside their practices (eye exams or foot exams, for example).
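The second and third categories can be made concrete with a minimal sketch of how a measure query might look for a hemoglobin A1c result. It assumes hypothetical local lab codes (`HGBA1C`, and an unmapped point-of-care code `A1C_POC`), a hypothetical `LOCAL_TO_LOINC` table, and an arbitrary 365-day look-back window. LOINC 4548-4 is the hemoglobin A1c code, but any real mapping should be checked against the LOINC database. A result counts only if it is mapped to LOINC and also stored with a structured date.

```python
from datetime import date, timedelta

A1C_LOINC = "4548-4"  # hemoglobin A1c; verify real mappings against the LOINC database

# Hypothetical local lab codes mapped to LOINC. "A1C_POC" (a point-of-care
# test) has deliberately been left unmapped.
LOCAL_TO_LOINC = {"HGBA1C": A1C_LOINC}


def has_recent_a1c(results, measurement_end, lookback_days=365):
    """True if any result maps to the A1c LOINC code and falls inside the
    look-back window ending on measurement_end (inclusive).

    results: list of (local_code, result_date) tuples; result_date is None
    when the test was recorded only in free text.
    """
    window_start = measurement_end - timedelta(days=lookback_days)
    for local_code, result_date in results:
        if LOCAL_TO_LOINC.get(local_code) != A1C_LOINC:
            continue  # unmapped local code: invisible to a LOINC-defined measure
        if result_date is None:
            continue  # no structured date: cannot be placed in the look-back window
        if window_start <= result_date <= measurement_end:
            return True
    return False


end = date(2011, 12, 31)
print(has_recent_a1c([("HGBA1C", date(2011, 6, 1))], end))   # True
print(has_recent_a1c([("A1C_POC", date(2011, 6, 1))], end))  # False: never mapped
```

The same clinical fact (an A1c done in June) is credited or missed depending solely on how it was coded, which is why the mapping work has to happen before measure calculation can be automated.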

All of this speaks to the need to have a deliberate and proactive approach to the CQM requirement because the CQM requirement asks physicians and EHRs to do unnatural acts. You would think that EHRs would be capable of such acrobatics – they’re just databases overlaid with user applications, after all – but alas, it’s not so simple.

We’re back to the common refrain that this isn’t solely (or even mostly) a technology problem. It’s a multi-step human and machine process and any misstep along the way leads to the same result – no data, or even worse, inaccurate and possibly misleading data.

We’ve found that giving careful thought to the following sequence is especially important to solving the quality measurement conundrum:

Getting data into my EHR

- What do I want to measure?

- What data is required to calculate the measures?

- Where do I get this data (i.e., is it documented in my office or do I need to get it from elsewhere)?

- Can I get this data into my EHR?
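One way to work through these first questions is a simple gap analysis. List the data elements each chosen measure needs and what the practice already captures as structured data, then compare the two. The measure names and data elements below are hypothetical simplifications, not the official measure specifications.

```python
# Hypothetical, simplified data requirements per chosen measure.
REQUIRED: dict[str, set[str]] = {
    "diabetes_a1c": {"diabetes_dx", "a1c_result"},
    "cad_beta_blocker": {"cad_dx", "prior_mi", "beta_blocker_med", "bb_exclusions"},
}

# Hypothetical: elements the practice already captures as structured data in its EHR.
CAPTURED: set[str] = {"diabetes_dx", "a1c_result", "cad_dx", "beta_blocker_med"}


def find_gaps(required: dict[str, set[str]], captured: set[str]) -> dict[str, list[str]]:
    """Return, for each measure with gaps, the required elements not yet captured."""
    gaps = {}
    for measure, elements in required.items():
        missing = elements - captured
        if missing:
            gaps[measure] = sorted(missing)
    return gaps
```

Here `find_gaps(REQUIRED, CAPTURED)` returns `{"cad_beta_blocker": ["bb_exclusions", "prior_mi"]}`. Those two elements are what the "Where do I get this data?" question then has to resolve.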

Getting measures out of my EHR

- Will my EHR reliably and accurately calculate the measures?

- Will my EHR allow me to validate (or at least sanity-check) the calculations?

- Will my EHR reliably and accurately generate a report of the measures?

- Will my EHR reliably and accurately (and securely) send the measures report to where it needs to go (e.g., CMS and/or state Medicaid agency)?
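An EHR may not show its work, but a few arithmetic checks on the counts it reports can still catch gross errors. This sketch assumes that exclusions are reported as a subset of the denominator and removed before the rate is computed. Check each measure's specification for how it actually handles exclusions. The function name is hypothetical.

```python
def sanity_check(numerator: int, denominator: int, exclusions: int) -> list[str]:
    """Return any problems found in one measure's reported counts (empty list = passes).

    Assumes exclusions are a subset of the denominator, so the
    performance rate would be numerator / (denominator - exclusions).
    """
    problems = []
    if min(numerator, denominator, exclusions) < 0:
        problems.append("negative count")
    if exclusions > denominator:
        problems.append("more exclusions than denominator patients")
    if numerator > denominator - exclusions:
        problems.append("numerator exceeds denominator after exclusions")
    return problems
```

For example, `sanity_check(40, 100, 10)` returns an empty list, while `sanity_check(95, 100, 10)` flags a numerator that cannot fit in the 90 patients left after exclusions.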

I will seriously overstay my welcome on HIStalk Practice if I go through each of these in great detail. The most important areas in my view are determining what measures you’re going to report on, developing a robust data acquisition and documentation strategy to support the numerators, denominators, and exclusions for your chosen measures, and finally, determining whether your EHR will be able to generate those measures. You may need to repeat this sequence a few times because your EHR may not be able to easily generate the measures that you originally chose (see table below).

Choosing measures that are most appropriate to your practice is the obvious place to begin. If your practice panel includes more patients with diabetes than with heart disease, choose the diabetes measures, because you’re more likely to understand them and you may already be doing most if not all of the needed documentation.

Remember that it’s okay if none of the measures apply to you (including the Core and Alternate Core). Not even CMS is going to ask you to recruit patients to satisfy a quality measurement requirement! You are, however, required to report zero denominators for the measures that don’t apply.

And for the optional measures, you can’t cherry-pick three that you know are zero (“Cool! My dermatology practice doesn’t have any chemotherapy, optic nerve evaluation, or asthma patients!”). If you submit zeroes for the optional measures, you are representing that you don’t have even a single patient who would be included in the denominator of ANY of the 38 optional measures.
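The zero-denominator rule for the optional measures can be written as a small check. This is a sketch of the rule as described here, not a CMS tool. The measure names and counts are hypothetical, and the dictionary is trimmed to four entries where a real one would hold all 38 Additional Set measures.

```python
def zero_denominators_allowed(denominators: dict[str, int], submitted: list[str]) -> bool:
    """Return True if the submitted Additional Set measures respect the rule
    described above: zero-denominator measures may be submitted only when
    every Additional Set measure has a zero denominator.

    denominators: denominator count for every Additional Set measure.
    submitted: the three Additional Set measures being reported.
    """
    if all(denominators[m] > 0 for m in submitted):
        return True  # no zero-denominator measure is being submitted
    return all(count == 0 for count in denominators.values())


# Hypothetical dermatology practice, trimmed to four measures for brevity.
derm = {"chemotherapy": 0, "optic_nerve": 0, "asthma": 0, "other_measure": 12}
print(zero_denominators_allowed(derm, ["chemotherapy", "optic_nerve", "asthma"]))  # False
```

The cherry-picked zeroes fail because `other_measure` has patients in its denominator, so that measure would have to be reported instead.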

You’ll want to develop a data acquisition and management strategy that examines the data requirements for the measures you think you’re going to choose. It should then itemize, one by one, how your office documentation approach is going to get that information into your EHR. You’ll find that some transactions, like labs or eRx (assuming you’re doing it 100% of the time), will be a foundation for a lot of measures. A structured problem list (ICD-9 or SNOMED-CT) is the other cornerstone that, together with labs and eRx, will get you a lot of the way there.
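Itemizing data sources against measures also shows which feeds do the most work. The following is a minimal sketch using a hypothetical, simplified mapping of measures to sources. Counting how many chosen measures depend on each source makes the foundational transactions stand out.

```python
from collections import Counter

# Hypothetical, simplified mapping of chosen measures to the data sources they draw on.
MEASURE_SOURCES: dict[str, set[str]] = {
    "diabetes_a1c": {"problem_list", "labs"},
    "diabetes_ldl": {"problem_list", "labs"},
    "cad_beta_blocker": {"problem_list", "erx"},
    "hypertension_bp": {"problem_list", "vitals"},
}


def source_usage(measure_sources: dict[str, set[str]]) -> Counter:
    """Count how many measures depend on each data source."""
    return Counter(src for sources in measure_sources.values() for src in sources)


for source, count in source_usage(MEASURE_SOURCES).most_common():
    print(f"{source}: {count} measure(s)")
```

In this toy mapping the problem list feeds all four measures and labs feed two, which mirrors the point above about which transactions deserve attention first.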

The exclusion criteria are the place to look for landmines. Don’t assume that you’re already collecting enough information to identify qualified exclusions, and don’t assume that a small number of exclusions means a small amount of work – you could easily find that 10% of the information takes 90% of the work.

For example, the numerator for beta blocker therapy for CAD patients with prior MI (NQF 0070) has a number of ways to document that the patient is indeed a CAD patient, did indeed have a prior MI, and did indeed have a beta blocker ordered or listed as an active med. But don’t forget that you need to remove from the denominator patients who have a pacemaker or have had an adverse event associated with beta blockers or any of 14 other extenuating circumstances that would indicate that the patient shouldn’t get this therapy.

Most physicians could go through this in their heads for any one of their patients, but if this process is going to be automated and scaled, it needs to be supported by clear and consistent documentation. By now we’re all familiar with the term Garbage In, Garbage Out. It applies here as well, but in the case of exclusion criteria it’s even worse – Nothing In, Garbage Out.
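Here is a minimal sketch of the "Nothing In, Garbage Out" problem, using a hypothetical and heavily abbreviated version of the NQF 0070 logic. The denominator is reduced to "prior MI", and only two of the exclusions mentioned above (pacemaker and beta blocker adverse event) are modeled, under made-up flag names. A patient with a real but undocumented exclusion stays in the denominator and counts as a failure.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical flag names for two of the exclusions; the real measure lists many more.
EXCLUSION_FLAGS = {"pacemaker", "beta_blocker_adverse_event"}


@dataclass
class CadPatient:
    prior_mi: bool
    beta_blocker_active: bool
    # Exclusion findings recorded as structured data (empty if never documented).
    documented: set[str] = field(default_factory=set)


def performance_rate(patients: list[CadPatient]) -> Optional[float]:
    """Share of eligible, non-excluded patients with an active beta blocker."""
    eligible = [p for p in patients if p.prior_mi]
    # Only structured documentation can remove a patient from the denominator.
    kept = [p for p in eligible if not (p.documented & EXCLUSION_FLAGS)]
    met = [p for p in kept if p.beta_blocker_active]
    return len(met) / len(kept) if kept else None


treated = [CadPatient(prior_mi=True, beta_blocker_active=True) for _ in range(3)]
paced_documented = CadPatient(prior_mi=True, beta_blocker_active=False, documented={"pacemaker"})
paced_undocumented = CadPatient(prior_mi=True, beta_blocker_active=False)

print(performance_rate(treated + [paced_documented]))    # 1.0
print(performance_rate(treated + [paced_undocumented]))  # 0.75
```

The care delivered is identical in both runs. Only the documentation of the pacemaker differs, and that alone moves the measured rate from 100% to 75%.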

Finally, before you do all of the hard work to ensure that you have good information going in, wouldn’t it be nice to know that your EHR will be able to do something with the information? Certification provides some confidence, but frankly, not nearly enough. EHRs are required to calculate all six of the Core and Alternate Core measures, but only a minimum of three (any three) of the 38 Additional Set measures. So you could find yourself working hard toward a measure, only to find that your EHR isn’t certified to calculate it.
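Before committing to measures, check the shortlist against the measures your EHR is actually certified to calculate. Below is a minimal sketch with hypothetical measure names and a hypothetical vendor certified for only the minimum three Additional Set measures.

```python
def split_by_certification(preferred: list[str], certified: set[str]) -> tuple[list[str], list[str]]:
    """Split a ranked shortlist into measures the EHR is certified for and those it is not."""
    supported = [m for m in preferred if m in certified]
    unsupported = [m for m in preferred if m not in certified]
    return supported, unsupported


# Hypothetical vendor certified for the bare minimum of three Additional Set measures.
vendor_certified = {"diabetes_a1c", "diabetes_ldl", "diabetes_bp"}
shortlist = ["cad_beta_blocker", "diabetes_a1c", "asthma_meds", "diabetes_ldl"]

supported, unsupported = split_by_certification(shortlist, vendor_certified)
if len(supported) < 3:
    print("Revisit the shortlist; unsupported:", unsupported)
```

Only two of the four preferred measures survive here, so the practice would loop back to the start of the sequence and choose again.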

How much variation is there among vendors? More than I expected, actually. I took a non-scientific random sample of 25 vendors from the almost 400 that are certified for quality measures and found the following:

- Only 20% (five) of the vendors I looked at were certified already for the full set of 44 measures (athenaClinicals, e-MDs, Epic, Greenway, and NextGen).

- The remaining 80% (20) were certified only for the bare minimum of nine of the 44 measures.

- The three diabetes measures got a lot of vendor attention (at least half of all of the vendors were certified for them), but coverage of the remaining 35 measures was highly variable.

- Half of the 38 optional measures were covered by only the five vendors who covered all measures.
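Coverage figures like these come from a simple tally over a vendor-by-measure matrix. The sketch below uses a tiny made-up matrix of four hypothetical vendors and three measures, not the survey data itself.

```python
def coverage(vendor_certs: dict[str, set[str]]) -> dict[str, float]:
    """Share of vendors certified for each measure (measures no vendor covers are omitted)."""
    n_vendors = len(vendor_certs)
    measures = set().union(*vendor_certs.values())
    return {
        m: sum(m in certs for certs in vendor_certs.values()) / n_vendors
        for m in sorted(measures)
    }


sample = {
    "vendor_a": {"m1", "m2", "m3"},
    "vendor_b": {"m1"},
    "vendor_c": {"m1", "m2"},
    "vendor_d": {"m1"},
}
print(coverage(sample))  # {'m1': 1.0, 'm2': 0.5, 'm3': 0.25}
```

A measure like `m3`, covered only by the one full-set vendor, is the pattern behind the last bullet above.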

If you want the gory details, they can be found here.

As a practical matter, this variation is not necessarily an issue in 2011 because it’s basically a paper attestation process, even on the quality measures, and CMS has said that you can attest to measures even if your EHR isn’t certified for them and that you are only responsible for what your EHR spits out – you don’t need to validate it.

That’s all well and good for a process that doesn’t require electronic submission and that isn’t actually using the measures for anything. Electronic reporting is supposed to start in 2012, however, and it’s hard to imagine that your EHR wouldn’t need to be certified for measures that are reported electronically. And even in 2011, you do have to report the numerator, denominator, and exclusions for each of the six measures and attest that this information was spit out by your EHR. If you choose measures your EHR isn’t certified for, that may not be a pretty process.

So even though you can attest to any measure regardless of whether your EHR is certified for it, that may only be a temporary reprieve until electronic reporting begins. And while you technically don’t need to care right now about what the measure results are, those results may matter more to you once they’re tied to performance incentives, so you’ll want to make sure your EHR is capable of meeting your needs and reporting requirements. With a new set of MU Stage 2 measures on the horizon, it’ll be even more important to know whether your EHR vendor is committed to developing functionality for the measures that you care most about.

Of course, the underlying issue with quality measures isn’t really about the documentation issues or the technical specifications of the measures or the capabilities of the EHRs. It’s about motivation: quality measurement (or any performance measurement, for that matter) is only valid when the users themselves care about it. We can do as much as we want to ease the pain, but in the end, quality measurement will only be as accurate or reliable as physicians themselves want it to be.

Micky Tripathi is president and CEO of the Massachusetts eHealth Collaborative. The views expressed are his own.

“Hey Micky you’re so fine, Pretzel Logic blows my mind.” You know, one of the best companions for your Pretzel Logic is beer. After a six-pack I get the motivation to read your article, and after a 12-pack and still counting, Pretzel Logic starts to make a great deal of sense, like the ontological conundrum of the “Who’s on First” paradigm.

Great article with didactic codification – No, seriously! Just sobered up.

Great article! I’m wondering about the CQM requirements as they pertain to clinics owned and operated by hospitals. Obviously the 38 measures are specific to MD practices, and hospitals have a set of 15 applicable to them, but which group do the hospital-owned clinics fall into? It seems to me that they would have to meet the subset of 38 as applicable. But if that is the case and the clinics use the same EMR as the hospital itself, I suppose that will present some extreme challenges for that organization, since their EMR will essentially need to meet both sets of CQM requirements. Also, I’m curious about why you didn’t include Meditech in your list of 40 vendors reviewed (given they are one of the big names in many venues)? Is it because they don’t have a strong private practice utilization?

Great article! It certainly has me thinking more about reporting standards. Somehow along the way I am hoping we don’t lose sight of creative thinking. With all of the standards and consistency, and less thinking outside of the box, I would hope providers still go with their gut instinct in some cases and not just with decision support. Clicking override buttons and justifying their decisions by writing a novel of free text may CYA, but it could also leave a provider second-guessing their care. The real Meaningful Use results in a provider successfully integrating technology without losing sight of his/her ability to diagnose and treat a patient. An EMR is a tool for them to use, nothing more, nothing less.

Thanks everyone for the great comments.

Re Alchemist: Thanks! (I think….) 🙂

Re The Confused: Great question. I was focused on EP measures and ambulatory EHRs (which is why Meditech was not included in my list but LSS was). If the hospital-owned providers furnish less than 90% of their services in a hospital setting (place-of-service code 21 or 23), they would be considered EPs regardless of ownership status. If they are on a hospital EHR, you’re right: that could be a real issue for quality measurement (and other MU requirements) because the system they’re on wouldn’t necessarily be certified for the requirement set they’re supposed to meet. If they are on an ambulatory EHR, they would be no better or worse off than other EPs. My understanding of the history of this 90% interpretation is that it was made to fill a gap between EP and Hospital MU requirements. The Hospital MU requirements pertain specifically to inpatient and ER settings and thus implicitly assume that hospitals don’t provide ambulatory services, which is of course not true (and increasingly not true). Allowing hospital-employed physicians to be considered EPs (under certain conditions) thus gives hospitals an incentive to pay attention to their ambulatory services as well as their inpatient services. That is, of course, assuming that their employment and payment agreements with their physicians allow the hospital employer to get the MU payments that go to its EP docs. Many health center and hospital employment contracts never anticipated this type of issue, which is awkward, to say the least.
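The 90% test described here is simple arithmetic over a provider’s services. Below is a minimal sketch. It assumes each service is tagged with its place-of-service code, and it treats 21 (inpatient hospital) and 23 (emergency room) as the hospital setting. It models only this one test, not the other eligibility criteria, and the function name is hypothetical.

```python
HOSPITAL_POS_CODES = {"21", "23"}  # inpatient hospital, emergency room


def treated_as_ep(pos_codes: list[str], threshold: float = 0.90) -> bool:
    """True if less than `threshold` of services were furnished in a hospital setting."""
    if not pos_codes:
        raise ValueError("no services to evaluate")
    hospital = sum(code in HOSPITAL_POS_CODES for code in pos_codes)
    return hospital / len(pos_codes) < threshold


print(treated_as_ep(["11"] * 2 + ["21"] * 8))  # True: 80% in a hospital setting
print(treated_as_ep(["21"] * 9 + ["23"]))     # False: 100% in a hospital setting
```

Note that exactly 90% is not "less than 90%", so a provider at the boundary would not be treated as an EP under this reading.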

Re RN prn: You may have seen Dr. Mostashari’s comment the other day that MU is a “roadmap to higher quality care”, not a “checklist”. As long as government dollars are involved we’ll continue to have this tension between wanting people to follow the spirit of MU (which I think the vast majority of clinicians agree with) and having to hold them to the letter (and font and size and color) of MU to assure that there is accountability to the taxpayers. As you suggest, it’s hard to keep the “roadmap” at the front of your mind when you’re being measured (and compensated and audited) on compliance with the “checklist”.