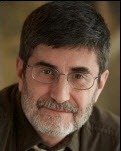

DOCtalk by Dr. Gregg 4/9/12

EHSD-Resolving RFP

Since I first broke the news about Allscripts’ sunsetting of my current EHR (Peak Practice) about a year and a half ago, I have developed a severe case of a newly defined malady: EHR Hunt Stress Disorder (EHSD). I am worn down, drug out, and generally pooped. I can’t figure anymore re: local host versus cloud versus disruptor / innovator versus corporate clout versus Quippe-able versus app-able versus templates versus NLP versus digital pens versus etc., etc., etc. I just can’t. I’m done.

I have seen a slew of systems: some great, some not so much. I’ve seen apps and clouds and cool tech. I’ve even had some offers to work with some vendors. But, in trying to decide, I think I have run headlong into The Paradox of Choice wall. Too many options have led me to the paralyzingly dissatisfactional funk of EHSD. Can’t find that “just right” one.

To fight my current dis-ease, I need a differentiator. To help me try to alleviate the doldrums inherent in EHSD, I’m putting out a Request For Proposal for a new trench grunt-friendly, EHSD-resolving EHR BFF.

Here’s the deal on what I seek:

1. A new EHR and a new EHR partner

- I want a system that works reasonably well. It doesn’t have to do everything or look just exactly as I’d prefer…yet. (I’m experienced with the “let’s get from here to there” thing.)

- I want a company that wants someone who will contribute to their development and success.

- A must-have: a company that actually continues to care about small grunt-type clients after the check has cleared.

- I’d like a company that “gets” the future, but respects history.

- I need a company/system I can trust.

- It’s nothing personal, but I’m not looking to make you the next millionaire. I’m a small-town solo pediatrician, and we’re pretty much the bottom feeders on the medical pay scale. I need a system that has a cost low enough with value high enough to actually deliver that ROI you all promise.

2. Transfer of data from my current EHR

- A must-have

3. Continuation of my current lab interface to Nationwide Children’s Hospital

- Not a deal breaker. Ohio will soon have this connectivity enabled via its HIE, CliniSync.

4. Practice Management compatible with an outside billing company

- Another must-have. I use an outside billing company (who accesses my current EHR) to whom I am exquisitely loyal. They have done some great things for me and are wonderful people. I adore them. (Not to mention that they have my AR turning every 19 days and 93% of all outstanding balances are less than 60 days.)

5. Submissions deadline

- Tomorrow (no matter what day you’re reading this).

6. Disclaimer

- All EHR vendors are eligible (except one).

Seriously, I’m tired of my EHSD. I’m looking to get EHR-healthy again. Whether we’ve spoken before or not, please submit your non-formal RFP (or questions) to doc@madisonpediatric.com.

(I’m sorta, kinda, not kidding.)

From the EHSD-weary trenches…

“Reality is the leading cause of stress amongst those in touch with it.” – Lily Tomlin

Dr. Gregg Alexander, a grunt-in-the-trenches pediatrician at Madison Pediatrics, is Chief Medical Officer for Health Nuts Media, directs the Pediatric Office of the Future exhibit for the American Academy of Pediatrics, and sits on the board of directors of the Ohio Health Information Partnership (OHIP).
